Overview

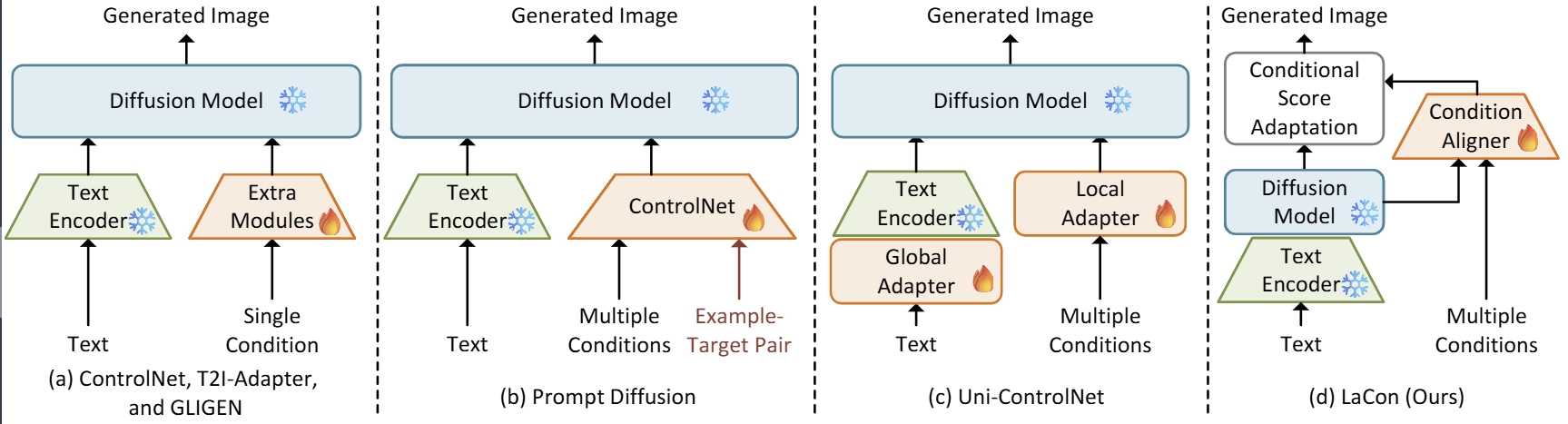

Diffusion models have demonstrated impressive abilities in generating photo-realistic and creative images. To make the generation process more controllable, existing studies, which we term early-constraint methods, incorporate extra conditions into pre-trained diffusion models. In particular, some of them adopt condition-specific modules that handle each condition separately and therefore struggle to generalize to other conditions. Follow-up studies present unified solutions to this generalization problem, but they require extra resources to implement, e.g., additional inputs or parameter optimization, so more flexible and efficient solutions for steerable guided image synthesis are still needed. In this paper, we present an alternative paradigm, namely Late-Constraint Diffusion (LaCon), to simultaneously integrate various conditions into pre-trained diffusion models. Specifically, LaCon establishes an alignment between the external condition and the internal features of diffusion models, and uses this alignment to incorporate the target condition, guiding the sampling process to produce tailored results. Experimental results on the COCO dataset illustrate the effectiveness and superior generalization capability of LaCon under various conditions and settings. Ablation studies investigate the roles of the different components in LaCon and illustrate its potential to serve as an efficient solution for flexible control of diffusion models.
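The late-constraint idea described above (align internal features with the external condition, then use that alignment to steer sampling) can be illustrated with a toy sketch. This is not the LaCon implementation: the linear "feature extractor" `W_feat`, the linear "aligner" `W_align`, the step size, and all function names are hypothetical stand-ins chosen so the gradient can be written by hand, and the denoising step of a real sampler is omitted entirely.

```python
import numpy as np

def toy_features(x, W_feat):
    """Hypothetical stand-in for the diffusion model's internal features."""
    return W_feat @ x

def aligner(features, W_align):
    """Hypothetical aligner: predicts the condition from internal features."""
    return W_align @ features

def condition_error(x, target, W_feat, W_align):
    """Distance between the predicted condition and the target condition."""
    predicted = aligner(toy_features(x, W_feat), W_align)
    return float(np.linalg.norm(predicted - target))

def guidance_step(x, target, W_feat, W_align, scale):
    """Move x one gradient step toward satisfying the target condition."""
    residual = aligner(toy_features(x, W_feat), W_align) - target
    # Gradient of 0.5 * ||W_align W_feat x - target||^2 with respect to x.
    grad = (W_align @ W_feat).T @ residual
    return x - scale * grad

rng = np.random.default_rng(0)
W_feat = rng.normal(size=(8, 4))   # toy sample (4-d) -> toy features (8-d)
W_align = rng.normal(size=(3, 8))  # toy features (8-d) -> condition (3-d)
target = rng.normal(size=3)        # toy target condition
x = rng.normal(size=4)             # toy "latent" being sampled

# Step size 1 / (largest singular value)^2 guarantees the error does not grow.
combined = W_align @ W_feat
scale = 1.0 / np.linalg.norm(combined, 2) ** 2

err_before = condition_error(x, target, W_feat, W_align)
for _ in range(50):
    x = guidance_step(x, target, W_feat, W_align, scale)
err_after = condition_error(x, target, W_feat, W_align)

print(f"condition error before guidance: {err_before:.4f}")
print(f"condition error after guidance:  {err_after:.4f}")
```

In an actual diffusion sampler, a correction of this kind would be interleaved with the model's denoising updates, and the gradient would come from automatic differentiation through the network rather than a closed-form expression.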

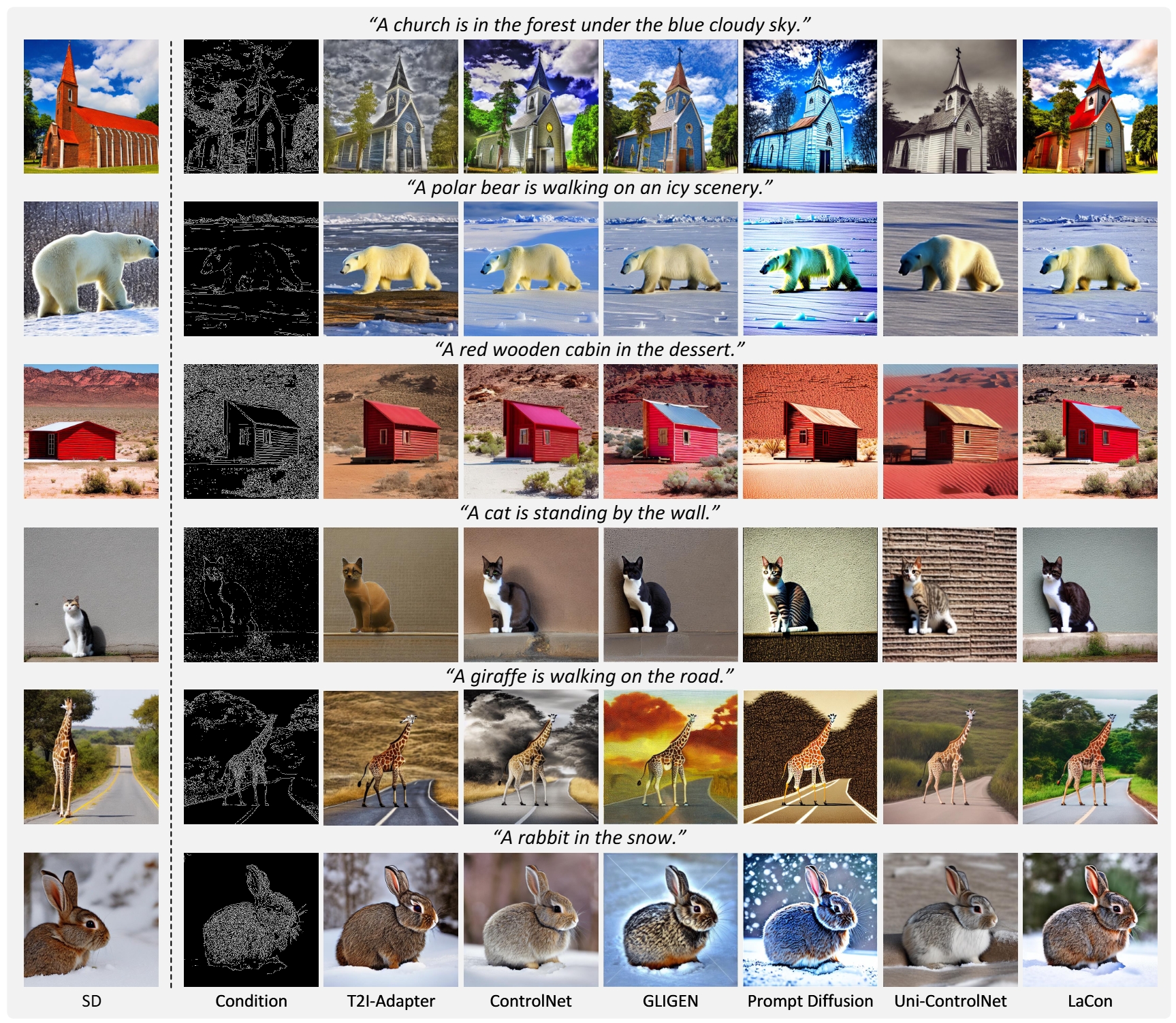

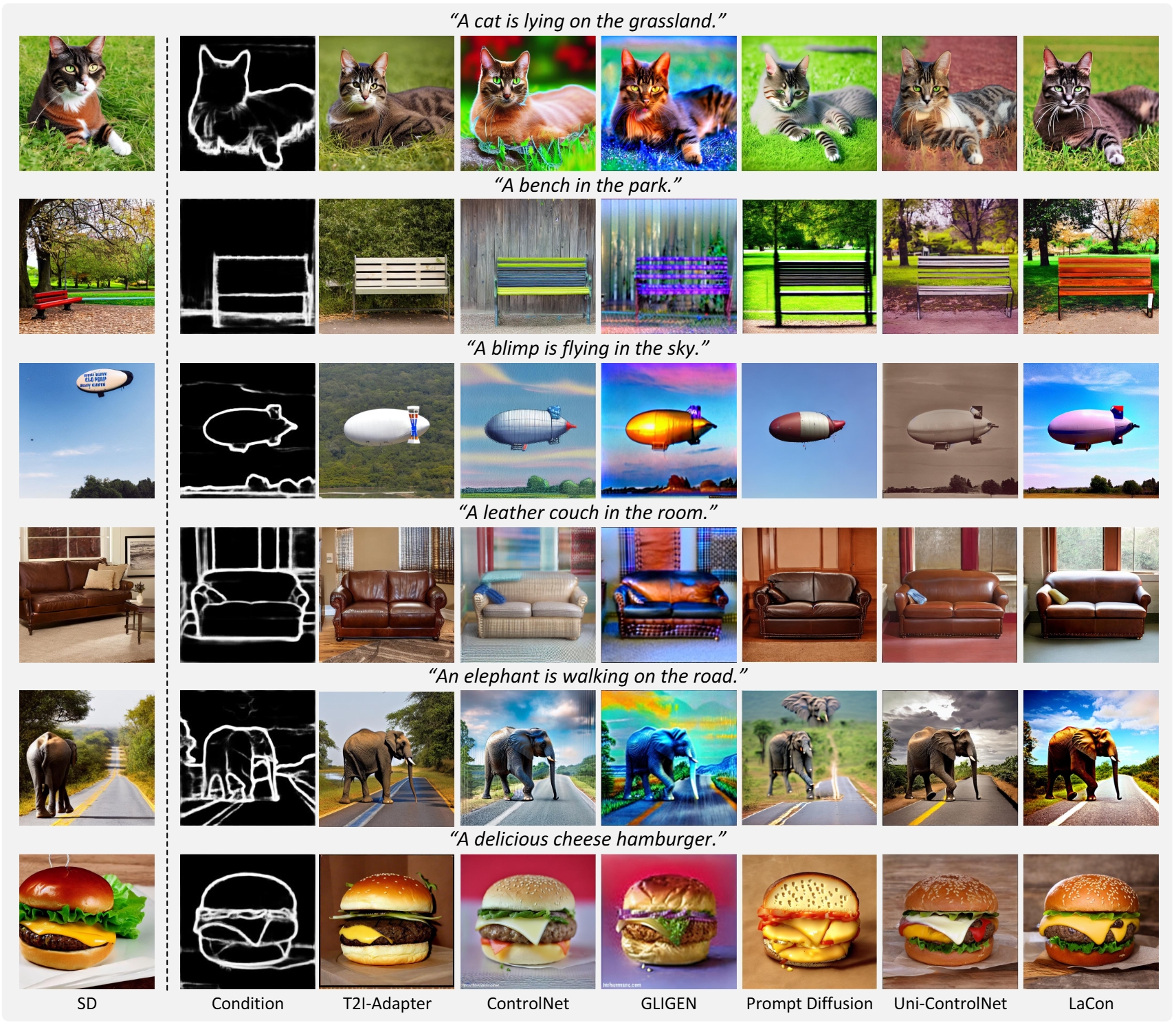

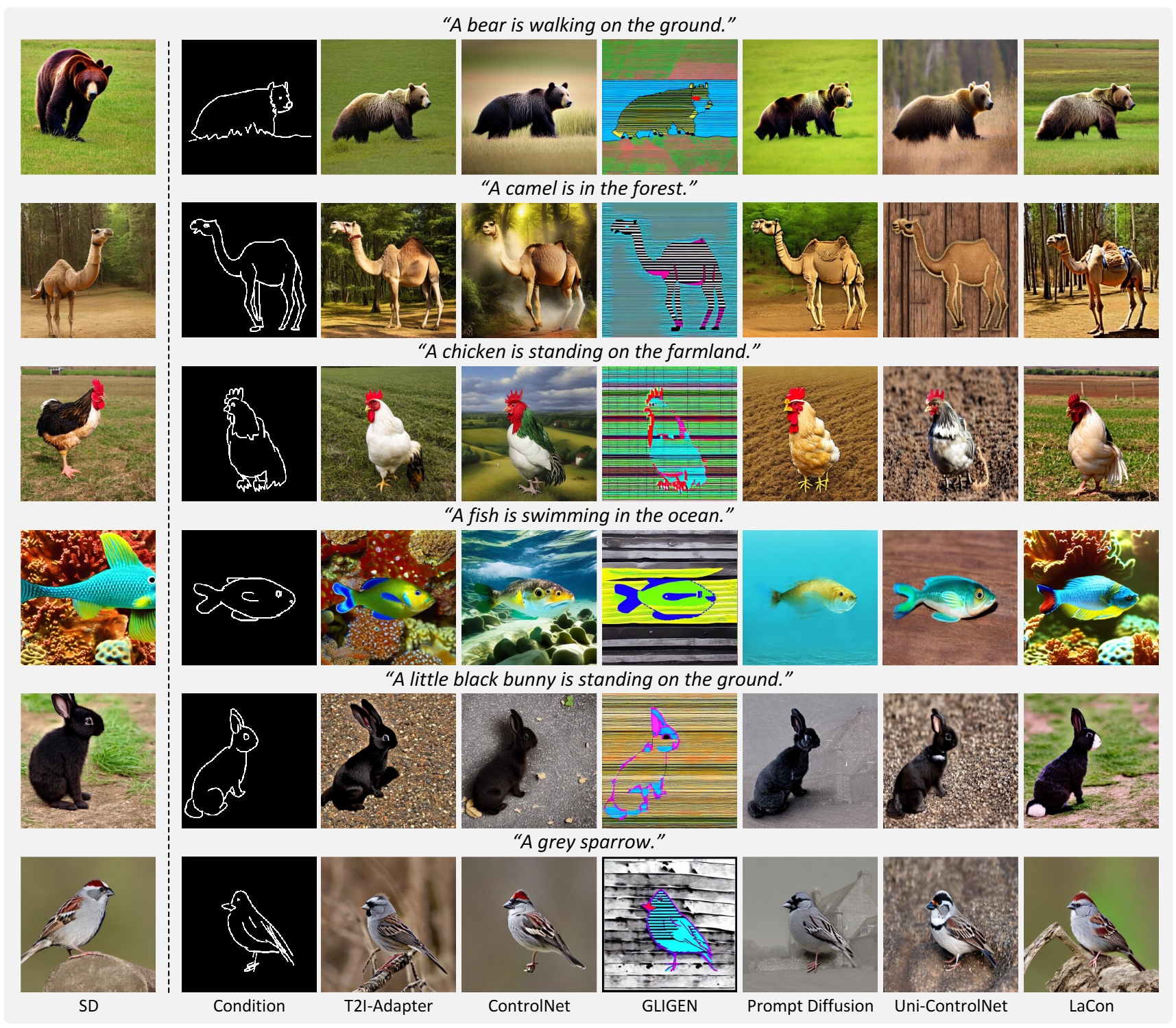

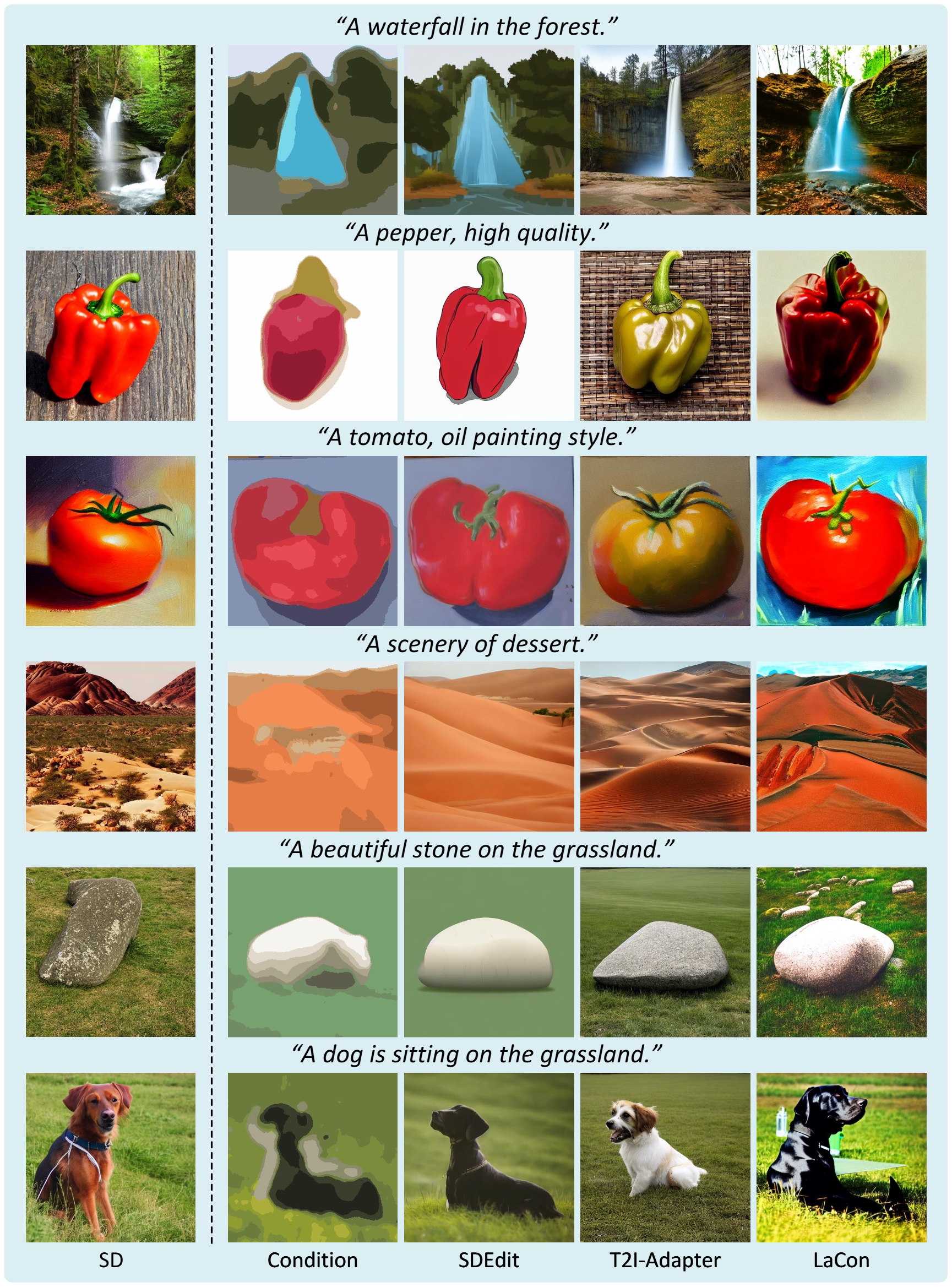

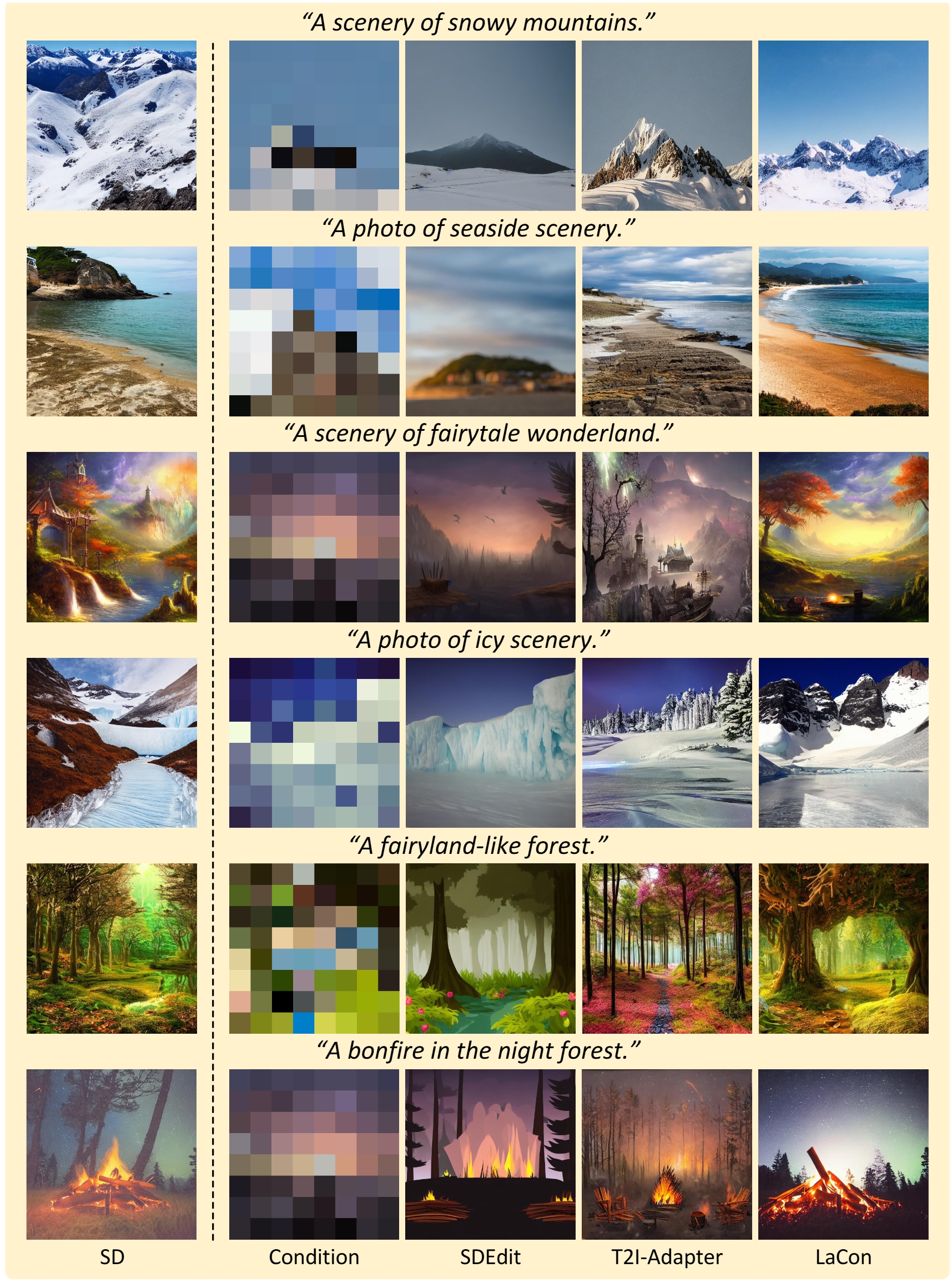

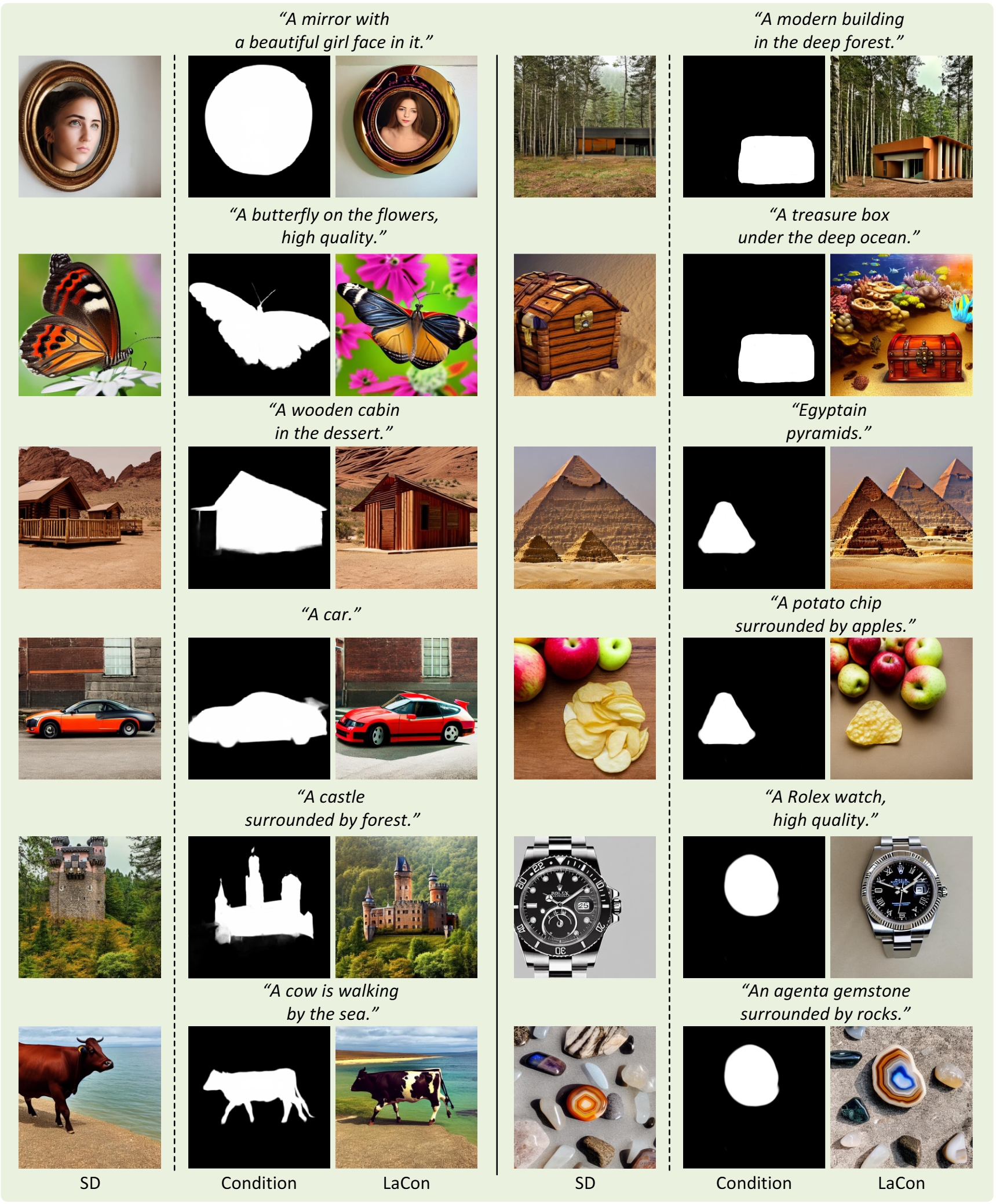

Qualitative Results

LaCon demonstrates promising performance when compared to state-of-the-art conditional generation methods.

Results are shown for six condition types: Canny Edge, HED Edge, User Sketch, Color Stroke, Image Palette, and Binary Mask.

BibTeX

If you find our paper helpful to your work, please cite it using the following BibTeX entry:

@misc{liu-etal-2024-lacon,
  title={{LaCon: Late-Constraint Diffusion for Steerable Guided Image Synthesis}},
  author={Chang Liu and Rui Li and Kaidong Zhang and Xin Luo and Dong Liu},
  year={2024},
  eprint={2305.11520},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}