|

Chang Liu

I am currently a PhD candidate at the University of Science and Technology of China (USTC), supervised by Prof. Dong Liu. I focus on applying foundation models to specific applications efficiently, or even in a tuning-free manner. You can find more information about me in the links below: |

|

Research

I am particularly interested in a wide range of tasks, e.g., image inpainting, conditional image synthesis, video-to-video editing, and large language model fine-tuning. Some of my representative works are listed below, and some of them are highlighted. |

|

(🌟 Stars 130)

StableV2V: Stablizing Shape Consistency in Video-to-Video Editing

Chang Liu, Rui Li, Kaidong Zhang, Yunwei Lan, Dong Liu* arXiv, 2024 [Paper] / [GitHub] / [Project] This work focuses on the shape misalignment problem in video-to-video editing, where we propose a training-free paradigm to ensure the consistency between object motions and user prompts. Also, we collect an evaluation benchmark named DAVIS-Edit for the community. |

|

(🌟 Stars 32)

LaCon: Late-Constraint Diffusion for Steerable Guided Image Synthesis

Chang Liu, Rui Li, Kaidong Zhang, Xin Luo, Dong Liu* arXiv, 2023 [Paper] / [GitHub] / [Project] This work is motivated by the limited generalization ability of prevailing conditional image synthesis studies. It performs the task by aligning the internal features of diffusion models with external control signals, and trains an efficient condition adapter for generalizable use. Promising performance, robust generalization ability, and superior efficiency are observed. |

|

(🌟 Stars 36)

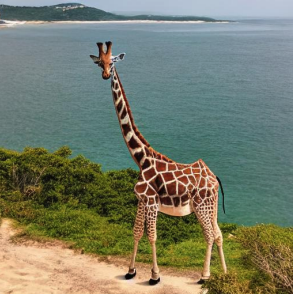

Towards Interactive Image Inpainting via Robust Sketch Refinement

Chang Liu, Shunxin Xu, Jialun Peng, Kaidong Zhang, Dong Liu* TMM, 2024 [Paper] / [GitHub] / [Project] This work aims to improve the use of real-world user sketches in interactive image inpainting. Specifically, it uses a sketch refinement network to first calibrate the input sketches, and then projects them onto the feature space to obtain multi-scale alignment with the inpainting networks, eventually handling real user sketches more effectively. |

|

(🌟 Stars 47)

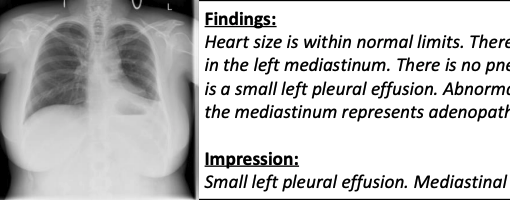

Bootstrapping Large Language Models for Radiology Report Generation

Chang Liu, Yuanhe Tian, Weidong Chen, Yan Song*, Yongdong Zhang, AAAI, 2024 [Paper] / [GitHub] This work aims to adapt large language models to a domain-specific application, i.e., radiology report generation, with In-domain Instance Induction (I3) and Coarse-to-Fine Decoding (C2FD) strategies to efficiently fine-tune the LLM. |

|

(🌟 Stars 84)

A Systematic Review of Deep Learning-based Research for Radiology Report Generation

Chang Liu, Yuanhe Tian, Yan Song*, arXiv, 2023 [Paper] / [GitHub] This work offers a comprehensive review of deep learning-based research for radiology report generation, from the perspectives of prerequisites, methodology, datasets, evaluation metrics, and performance analysis. An open-source GitHub repository is maintained to summarize all related studies and resources on this task for the community. |